2. Background Theory

This section provides a basic review of the physics, discretization, and optimization techniques used to solve the frequency-domain electromagnetics problem. It is assumed that the user has some background in these areas. For further reading, see [Nab91].

Important

- This code uses the following coordinate system and Fourier convention to solve Maxwell’s equations:

  - X = Easting, Y = Northing, Z positive downward (left-handed)

  - An \(e^{-i \omega t}\) Fourier convention

2.1. Fundamental Physics

Maxwell’s equations provide the starting point for understanding how electromagnetic fields can be used to uncover the substructure of the Earth. In the frequency domain, Maxwell’s equations are:

where \(\mathbf{E}\) and \(\mathbf{H}\) are the electric and magnetic fields, \(\mathbf{s}\) is some external source and \(e^{-i\omega t}\) is suppressed. Symbols \(\mu\), \(\sigma\) and \(\omega\) are the magnetic permeability, conductivity, and angular frequency, respectively. This formulation assumes a quasi-static regime, so that the system can be viewed as a diffusion equation (Weaver, 1994; Ward and Hohmann, 1988 in [Nab91]). Several difficulties arise when solving this system:

- the curl operator has a non-trivial null space, making the resulting linear system highly ill-conditioned

- the conductivity \(\sigma\) varies over several orders of magnitude

- the fields can vary significantly near the sources but smooth out with distance, so high resolution is required near the sources
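For reference, a form of the quasi-static equations that is consistent with the symbols defined above and with the \(e^{-i\omega t}\) convention can be sketched as follows. The signs and the scaling of the source term are reconstructed from those definitions and are an assumption, not a statement of how the code scales its sources:

\[\begin{split}\nabla \times \mathbf{E} - i\omega\mu \mathbf{H} &= 0 \\ \nabla \times \mathbf{H} - \sigma \mathbf{E} &= \mathbf{s}\end{split}\]

Under the same assumption, substituting \(\mathbf{H} = (i\omega\mu)^{-1} \nabla \times \mathbf{E}\) from the first equation into the second and multiplying by \(i\omega\) gives a second-order equation in the electric field alone:

\[\nabla \times \mu^{-1} \nabla \times \mathbf{E} - i\omega\sigma \mathbf{E} = i\omega \mathbf{s}\]

The curl-curl term is where the null space of the curl operator enters, and the \(i\omega\sigma\) term is where the large range of conductivities enters.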

2.2. Octree Mesh

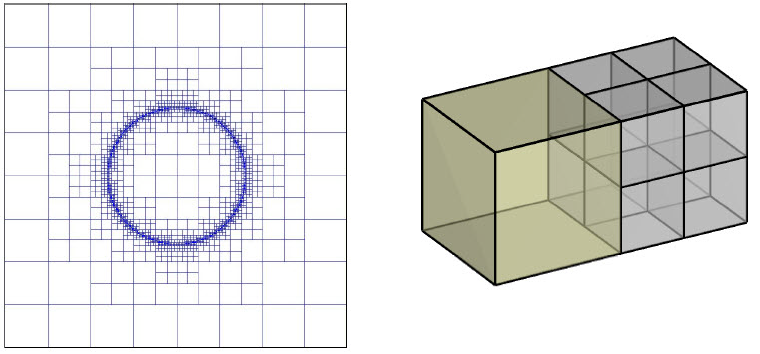

By using an Octree discretization of the earth domain, the areas near sources and the likely model location can be given a higher resolution while cells grow larger with distance. In this manner, the necessary refinement can be obtained without the computational expense of a uniformly fine mesh. The accompanying figure shows an example of an Octree mesh with nine cells, eight of which are at the minimum size of the base mesh.

When working with Octree meshes, the underlying mesh is defined as a regular 3D orthogonal grid with \(2^{n_1} \times 2^{n_2} \times 2^{n_3}\) cells, i.e. the number of cells in each dimension is a power of 2. The cell widths for the underlying mesh are \(h_1, \; h_2, \; h_3\), respectively. This underlying mesh is the finest possible, so that larger cells have side lengths which increase by powers of 2. The idea is that if the recovered model properties change slowly over a certain volume, the cells bounded by this volume can be merged into one without losing modeling accuracy, and cells are refined only where the model begins to change rapidly.
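The sizing rules above can be sketched in a few lines of Python. The function names and sample values are illustrative assumptions and are not part of the code's input format:

```python
def underlying_mesh_shape(n1, n2, n3):
    """Number of finest cells along each axis: 2**n1, 2**n2, 2**n3."""
    return (2 ** n1, 2 ** n2, 2 ** n3)


def cell_widths(h, level):
    """Side lengths of a cell merged `level` times from the finest size.

    h     : (h1, h2, h3) widths of the finest (underlying) cells
    level : 0 for the finest cells; each merge doubles every side length
    """
    return tuple(hi * 2 ** level for hi in h)


def finest_cells_replaced(level):
    """How many finest cells one merged cell at `level` stands in for."""
    return 8 ** level


shape = underlying_mesh_shape(5, 5, 4)
coarse = cell_widths((25.0, 25.0, 10.0), 3)
saved = finest_cells_replaced(3)
```

A single cell merged three times replaces \(8^3\) cells of the underlying mesh, which is where the savings at distance from the sources come from.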

2.3. Discretization of Operators

The operators div, grad, and curl are discretized using a finite volume formulation. Although div and grad do not appear in (2.1), they are required for the solution of the system. The divergence operator is discretized in the usual flux-balance approach, which by Gauss’ theorem considers the current flux through each face of a cell. The nodal gradient (which operates on a function with values on the nodes) is obtained by differencing adjacent nodes and dividing by the edge length. The curl operator is discretized in a similar way to the divergence operator: using Stokes’ theorem, the magnetic field components are summed around the edges of each face. Please see [HHG+12] for a detailed description of the discretization process.
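As a minimal illustration of the nodal gradient and the flux-balance divergence, the following Python sketch works on a single row of cells. It is a 1D analogue written for this page, with hypothetical function names, and it does not reproduce the Octree operators of [HHG+12]:

```python
def nodal_gradient(node_values, edge_lengths):
    """Difference adjacent nodal values and divide by the edge length."""
    return [
        (node_values[i + 1] - node_values[i]) / edge_lengths[i]
        for i in range(len(edge_lengths))
    ]


def cell_divergence(face_fluxes, face_area, cell_volume):
    """Flux balance for one cell: net outward flux divided by cell volume.

    face_fluxes : (left, right) normal flux densities on the two x-faces
    """
    left, right = face_fluxes
    return (right - left) * face_area / cell_volume


# phi = x**2 sampled at nodes x = 0, 1, 2, 4 (non-uniform spacing)
grad = nodal_gradient([0.0, 1.0, 4.0, 16.0], [1.0, 1.0, 2.0])
div = cell_divergence((2.0, 5.0), face_area=4.0, cell_volume=8.0)
```

The gradient values live on the edges between nodes, and the divergence value lives at the cell center, which mirrors the staggered placement of variables used by the finite volume formulation.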

2.4. Forward Problem

2.4.1. Direct Solver Approach

To solve the forward problem, we must first discretize and solve for the fields in Eq. (2.1), where \(e^{-i\omega t}\) is suppressed. Using finite volume discretization, the electric fields on cell edges (\(\mathbf{u_e}\)) are obtained by solving the following system at every frequency:

where \(\mathbf{s_e}\) is a source term defined on cell edges, \(\mathbf{C}\) is the curl operator and:

where \(\mathbf{V}\) is a diagonal matrix containing all cell volumes, \(\mathbf{A_{f2c}}\) averages from faces to cell centres and \(\mathbf{A_{e2c}}\) averages from edges to cell centres. The magnetic permeabilities and conductivities for each cell are contained within vectors \(\boldsymbol{\mu}\) and \(\boldsymbol{\sigma}\), respectively.

Once the electric field on cell edges has been computed, the electric (\(\mathbf{E}\)) and magnetic (\(\mathbf{H}\)) fields at observation locations can be obtained via the following:

where \(\mathbf{Q_c}\) represents the appropriate projection matrix from cell centers to a particular receiver (Ex, Ey, Ez, Hx, Hy or Hz). If we let

then (2.3) can be written as:

2.4.2. Iterative Solver Approach

For this approach we decompose the electric field as follows:

where \(\mathbf{u_e}\) are the fields on cell edges, \(\mathbf{a}\) is the vector potential, \(\phi\) is the scalar potential and \(\mathbf{G}\) is the discrete gradient operator. To compute the electric fields, the BiCGstab algorithm is used to solve the following system:

where

is a matrix that is added to the (1,1) block of Eq. (2.7) to improve the stability of the system and \(\mathbf{A}\) is given by Eq. (2.4). Once Eq. (2.7) is solved, Eq. (2.6) is used to obtain the electric fields on cell edges and Eq. (2.3) computes the fields at the receivers.

Adjustable parameters for solving Eq. (2.7) iteratively using BiCGstab are defined as follows:

- tol_bicg: relative tolerance (stopping criterion) when the solver is used during forward modeling; i.e. solves Eq. (2.2). Ideally, this number is very small (~1e-10).

- tol_ipcg_bicg: relative tolerance (stopping criterion) when the solver is needed in the computation of \(\delta m\) during a Gauss-Newton iteration; i.e. must solve Eq. (2.10) to solve Eq. (2.16). This value does not need to be as small as the previous parameter (~1e-5).

- max_it_bicg: maximum number of BiCGstab iterations (~100)
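To show how a relative tolerance and an iteration cap interact, here is a minimal, unpreconditioned BiCGstab sketch in plain Python for a small real system. It is an illustration only: the actual system is complex-valued and sparse, and the preconditioning and the exact residual norm used by the program are not described here. The argument names simply reuse the parameter names above.

```python
import math


def matvec(A, x):
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def bicgstab(A, b, tol_bicg=1e-10, max_it_bicg=100):
    """Solve A x = b; return (x, relative_residual, iterations_used)."""
    n = len(b)
    x = [0.0] * n
    r = list(b)                  # residual for the zero initial guess
    r_hat = list(r)              # fixed shadow residual
    rho = alpha = omega = 1.0
    v = [0.0] * n
    p = [0.0] * n
    b_norm = math.sqrt(dot(b, b))
    rel = 1.0
    for it in range(1, max_it_bicg + 1):
        rho_new = dot(r_hat, r)
        beta = (rho_new / rho) * (alpha / omega)
        p = [ri + beta * (pi - omega * vi) for ri, pi, vi in zip(r, p, v)]
        v = matvec(A, p)
        alpha = rho_new / dot(r_hat, v)
        s = [ri - alpha * vi for ri, vi in zip(r, v)]
        rel = math.sqrt(dot(s, s)) / b_norm
        if rel < tol_bicg:       # converged after the first half step
            x = [xi + alpha * pi for xi, pi in zip(x, p)]
            return x, rel, it
        t = matvec(A, s)
        omega = dot(t, s) / dot(t, t)
        x = [xi + alpha * pi + omega * si for xi, pi, si in zip(x, p, s)]
        r = [si - omega * ti for si, ti in zip(s, t)]
        rho = rho_new
        rel = math.sqrt(dot(r, r)) / b_norm
        if rel < tol_bicg:       # converged after the full step
            return x, rel, it
    return x, rel, max_it_bicg   # iteration cap reached


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x, rel, its = bicgstab(A, b, tol_bicg=1e-10, max_it_bicg=100)
```

A loose tolerance such as 1e-5 makes the solver exit earlier with a less accurate solution, which is why it is acceptable inside the Gauss-Newton step but not for the final forward modeling.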

2.4.3. Total vs Secondary Field

To compute the total field or the secondary field, we define a different right-hand-side for Eq. (2.2) (direct solver) or Eq. (2.7) (iterative solver).

Total Field Computation:

For total field data, the analytic source current \(\mathbf{s}\) defined in Eq. (2.1) is interpolated to cell edges by a function \(f(\mathbf{s})\). It is then multiplied by an inner-product matrix \(\mathbf{M_e}\) that is defined on cell edges. Thus for the right-hand-side in Eq. (2.2) or Eq. (2.7), the discrete source term \(\mathbf{s_e}\) is given by:

where

Secondary Field Computation (Direct Solver):

For secondary field data, we compute the analytic electric field in a homogeneous full-space due to a current loop or wire. We do this for a background conductivity \(\sigma_0\) and permeability \(\mu_0\). The analytic solution for our source is computed by taking the analytic solution for an electric dipole and integrating over the path of the wire/loop, i.e.:

For an electric dipole at the origin and oriented along the \(\hat{x}\) direction, the electric field in a homogeneous full-space is given by:

where

Once the analytic background field is computed on cell edges, we construct the linear operator \(\mathbf{A}(\mathbf{\sigma_0})\) from Eq. (2.4) using the background conductivity and permeability. Then we use \(\mathbf{A}(\mathbf{\sigma_0})\) and \(\mathbf{u_0}\) to compute the right-hand-side that is used to solve Eq. (2.2).

Secondary Field Computation (Iterative Solver):

A similar approach is taken in this case. Here, we must compute the analytic vector potential \(\mathbf{a}\) and scalar potential \(\phi\) for the background conductivity and permeability. The matrix in Eq. (2.7) is computed for the same background conductivity and permeability. The linear operator and analytic potentials are then used to compute the right-hand side.

2.5. Sensitivity

Electric and magnetic field observations are split into their real and imaginary components. Thus the data at a particular frequency for a particular reading is organized in a vector of the form:

where \(\prime\) denotes real components and \(\prime\prime\) denotes imaginary components. To determine the sensitivity of the data (i.e. (2.9)) with respect to the model (\(\boldsymbol{\sigma}\)), we must compute:

where the conductivity model \(\boldsymbol{\sigma}\) is real-valued. To differentiate \(E^\prime_x\) (or any other field component) with respect to the model, we require the derivative of the electric fields on cell edges (\(\mathbf{u_e}\)) with respect to the model. This is given by:

Note

Eq. (2.10) defines the sensitivities when using the direct solver formulation. Computations involving the sensitivities will differ if the iterative solver approach is used.
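The splitting of complex observations into real and imaginary parts described in this section can be sketched as follows. The function name, the sample values, and the ordering of the entries (real part followed by imaginary part, component by component) are assumptions made for illustration:

```python
def split_real_imag(fields, components=("Ex", "Ey", "Ez", "Hx", "Hy", "Hz")):
    """Flatten complex field values into [Re, Im, Re, Im, ...]."""
    data = []
    for name in components:
        value = fields[name]
        data.extend([value.real, value.imag])
    return data


# One reading at one frequency (hypothetical values)
observed = {
    "Ex": 1.0e-6 + 2.0e-7j, "Ey": -3.0e-7 + 0.0j, "Ez": 0.0 + 5.0e-8j,
    "Hx": 2.0e-3 - 1.0e-4j, "Hy": 4.0e-4 + 4.0e-4j, "Hz": -1.0e-5 + 0.0j,
}
d = split_real_imag(observed)
```

Because every entry of the data vector is real and the conductivity model is real-valued, each row of the sensitivity is real as well.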

2.6. Inverse Problem

We are interested in recovering the conductivity distribution of the Earth. However, the inverse problem is made numerically more challenging by the fact that rock conductivities can span many orders of magnitude. To deal with this, we define the model as the log-conductivity for each cell, i.e.:

The inverse problem is solved by minimizing the following global objective function with respect to the model:

where \(\phi_d\) is the data misfit, \(\phi_m\) is the model objective function and \(\beta\) is the trade-off parameter. The data misfit ensures the recovered model adequately explains the set of field observations. The model objective function adds geological constraints to the recovered model. The trade-off parameter weights the relative emphasis between fitting the data and imposing geological structures.
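A short Python sketch of the log-conductivity model and of the global objective function is given below. The natural logarithm and the function names are assumptions made for illustration:

```python
import math


def to_model(conductivities):
    """Map cell conductivities (S/m) to the log-conductivity model."""
    return [math.log(s) for s in conductivities]


def to_conductivity(model):
    """Inverse mapping, from the model back to conductivities."""
    return [math.exp(m) for m in model]


def global_objective(phi_d, phi_m, beta):
    """Data misfit plus beta times the model objective function."""
    return phi_d + beta * phi_m


sigma = [1e-5, 1e-3, 1e-1, 10.0]    # six orders of magnitude
m = to_model(sigma)                  # spans only about 14 units
phi = global_objective(phi_d=10.0, phi_m=2.0, beta=0.5)
```

Working in log-conductivity also guarantees that any recovered model maps back to strictly positive conductivities.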

2.6.1. Data Misfit

Here, the data misfit is represented as the L2-norm of a weighted residual between the observed data (\(d_{obs}\)) and the predicted data for a given conductivity model \(\boldsymbol{\sigma}\), i.e.:

where \(W_d\) is a diagonal matrix containing the reciprocals of the uncertainties \(\boldsymbol{\varepsilon}\) for each measured data point, i.e.:

Important

For a better understanding of the data misfit, see the GIFtools cookbook.
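As a minimal sketch, the weighted misfit can be computed as below. Any constant factor in front of the norm (for example one half) is omitted here, which is an assumption, and the function name and sample values are hypothetical:

```python
def data_misfit(d_pred, d_obs, uncertainties):
    """Sum of squared residuals, each scaled by 1/uncertainty."""
    return sum(
        ((p - o) / e) ** 2
        for p, o, e in zip(d_pred, d_obs, uncertainties)
    )


d_obs = [1.00, 2.00, 3.00]
d_pred = [1.10, 1.90, 3.30]
eps = [0.10, 0.10, 0.30]
phi_d = data_misfit(d_pred, d_obs, eps)
```

In this example every residual is about one uncertainty in size, so the misfit is close to the number of data. Data with small uncertainties dominate the sum, while poorly constrained data contribute little.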

2.6.2. Model Objective Function

Due to the ill-posedness of the problem, there are no stable solutions obtained by freely minimizing the data misfit, and thus regularization is needed. The regularization uses penalties for both smoothness, and likeness to a reference model \(m_{ref}\) supplied by the user. The model objective function is given by:

where \(\alpha_s, \alpha_x, \alpha_y\) and \(\alpha_z\) weight the relative emphasis on minimizing differences from the reference model and the smoothness along each gradient direction, and \(w_s, w_x, w_y\) and \(w_z\) are additional user-defined weighting functions.

An important consideration arises when discretizing the regularization onto the mesh. In this instance, the gradient operates on cell-centered variables. A short-distance approximation is second-order accurate on a domain with uniform cells, but only \(\mathcal{O}(1)\) in areas where cells are non-uniform. To rectify this, a higher-order approximation is used ([HHG+12]). The second-order approximation of the model objective function can be expressed as:

where the regularizer is given by:

The Hadamard product is given by \(\odot\), \(\mathbf{v_x}\) is the volume of each cell averaged to x-faces, \(\mathbf{w_x}\) is the weighting function \(w_x\) evaluated on x-faces and \(\mathbf{G_x}\) computes the x-component of the gradient from cell centers to cell faces. The y and z terms are defined analogously.
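The structure of the regularizer (a smallness term plus volume-weighted squared gradients on faces) can be illustrated on a single row of cells. This is a simplified 1D sketch with hypothetical names. It uses the plain short-distance gradient rather than the higher-order approximation of [HHG+12], and it applies the smoothness penalty to the model alone, which is an assumption:

```python
def model_objective_1d(m, m_ref, h, alpha_s, alpha_x, w_s=None, w_x=None):
    """Smallness plus x-smoothness penalty on a 1D row of cells.

    m, m_ref : model and reference model at cell centers
    h        : cell widths (used as the 1D cell "volumes")
    """
    n = len(m)
    w_s = w_s or [1.0] * n
    w_x = w_x or [1.0] * (n - 1)
    smallness = sum(
        w_s[i] * h[i] * (m[i] - m_ref[i]) ** 2 for i in range(n)
    )
    smoothness = 0.0
    for i in range(n - 1):
        dist = 0.5 * (h[i] + h[i + 1])      # center-to-center distance
        v_face = 0.5 * (h[i] + h[i + 1])    # cell volume averaged to the face
        grad = (m[i + 1] - m[i]) / dist     # gradient from centers to the face
        smoothness += w_x[i] * v_face * grad ** 2
    return alpha_s * smallness + alpha_x * smoothness


phi_m = model_objective_1d(
    m=[0.0, 1.0, 1.0], m_ref=[0.0, 0.0, 0.0], h=[1.0, 1.0, 1.0],
    alpha_s=1.0, alpha_x=1.0,
)
```

Raising `alpha_x` relative to `alpha_s` favors smooth models, while raising `alpha_s` pulls the result toward the reference model.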

If we require that the recovered model values satisfy the bounds \(\mathbf{m_L \preceq m \preceq m_H}\), the resulting bounded optimization problem we must solve is:

A simple Gauss-Newton optimization method is used, in which each Gauss-Newton step is obtained by solving the system of equations with the incomplete preconditioned conjugate gradient (IPCG) method. For more information, refer again to [HHG+12] and references therein.

2.6.3. Inversion Parameters and Tolerances

2.6.3.1. Cooling Schedule

Our goal is to solve Eq. (2.15), i.e.:

but how do we choose an acceptable trade-off parameter \(\beta\)? For this, we use a cooling schedule, which is described in the GIFtools cookbook. The cooling schedule is defined using the following parameters:

- beta_max: The initial value for \(\beta\)

- beta_factor: The factor by which \(\beta\) is decreased for each subsequent solution of Eq. (2.15)

- beta_min: The minimum \(\beta\) for which Eq. (2.15) is solved before the inversion quits

- Chi Factor: The inversion program stops when the data misfit \(\phi_d \leq N \times Chi \; Factor\), where \(N\) is the number of data observations
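The way these four parameters interact can be sketched as a simple loop. This is an illustration under stated assumptions: `solve_for_beta` is a hypothetical stand-in for one full solution of Eq. (2.15), and `beta_factor` is assumed to be a multiplier between 0 and 1.

```python
def cooling_schedule(solve_for_beta, n_data, beta_max, beta_min,
                     beta_factor, chi_factor):
    """Decrease beta until the target misfit or beta_min is reached.

    solve_for_beta : hypothetical callable returning the data misfit
                     of the model recovered for a given beta
    Returns a list of (beta, phi_d) pairs, one per solved problem.
    """
    if not 0.0 < beta_factor < 1.0:
        raise ValueError("this sketch assumes 0 < beta_factor < 1")
    history = []
    beta = beta_max
    while beta >= beta_min:
        phi_d = solve_for_beta(beta)
        history.append((beta, phi_d))
        if phi_d <= n_data * chi_factor:    # target misfit reached
            break
        beta *= beta_factor                 # cool: reduce the trade-off
    return history


# Stand-in for an inversion: the misfit shrinks as beta shrinks.
history = cooling_schedule(lambda beta: 50.0 + 10.0 * beta, n_data=100,
                           beta_max=100.0, beta_min=1e-3,
                           beta_factor=0.5, chi_factor=1.0)
```

In this toy run the loop ends because the target misfit is reached. With a stand-in whose misfit never drops below the target, the loop would instead end once \(\beta\) falls below `beta_min`.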

2.6.3.2. Gauss-Newton Update

For a given trade-off parameter (\(\beta\)), the model \(\mathbf{m}\) is updated using the Gauss-Newton approach. Because the problem is non-linear, several model updates may need to be completed for each \(\beta\). Where \(k\) denotes the Gauss-Newton iteration, we solve:

using the current model \(\mathbf{m}_k\) and update the model according to:

where \(\mathbf{\delta m}_k\) is the step direction, \(\nabla \phi_k\) is the gradient of the global objective function, \(\mathbf{H}_k\) is an approximation of the Hessian and \(\alpha\) is a scaling constant. This process is repeated until any of the following occurs:

- The gradient is sufficiently small, i.e.:

\[\| \nabla \phi_k \|^2 < tol \_ nl\]

- The largest component of the model perturbation is small in absolute value, i.e.:

\[\textrm{max} ( |\mathbf{\delta m}_k | ) < mindm\]

- A maximum number of Gauss-Newton iterations has been performed, i.e.:

\[k = iter \_ per \_ beta\]
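The model update and the three stopping criteria above can be sketched as follows. The function names are hypothetical, the update is assumed to be a scaled step added to the current model, and how the scaling constant is chosen (for example by a line search) is not shown:

```python
def update_model(m, delta_m, alpha):
    """Take a scaled step along the Gauss-Newton direction."""
    return [mi + alpha * di for mi, di in zip(m, delta_m)]


def gauss_newton_should_stop(grad, delta_m, k, tol_nl, mindm, iter_per_beta):
    """Return the name of the first stopping criterion met, or None."""
    if sum(g * g for g in grad) < tol_nl:         # squared gradient norm
        return "gradient"
    if max(abs(d) for d in delta_m) < mindm:      # largest step component
        return "step"
    if k >= iter_per_beta:                        # iteration cap for this beta
        return "iterations"
    return None


m_next = update_model([1.0, 2.0], [0.5, -1.0], alpha=0.5)
reason = gauss_newton_should_stop(
    grad=[1e-3, 2e-3], delta_m=[1e-6, -2e-6], k=2,
    tol_nl=1e-8, mindm=1e-4, iter_per_beta=5,
)
```

Here the gradient is still above `tol_nl`, but every component of the step is below `mindm`, so the iteration for this \(\beta\) stops on the second criterion.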

2.6.3.3. Gauss-Newton Solve

Here we discuss the details of solving Eq. (2.16) for a particular Gauss-Newton iteration \(k\). Using the data misfit from Eq. (2.12) and the model objective function from Eq. (2.14), we must solve:

where \(\mathbf{J}\) is the sensitivity of the data to the current model \(\mathbf{m}_k\). The system is solved for \(\mathbf{\delta m}_k\) using the incomplete preconditioned conjugate gradient (IPCG) method. This method is iterative and exits with an approximation for \(\mathbf{\delta m}_k\). Let \(i\) denote an IPCG iteration and let \(\mathbf{\delta m}_k^{(i)}\) be the solution to Eq. (2.18) at the \(i^{th}\) IPCG iteration. The algorithm quits when either of the following occurs:

- the system is solved to within some tolerance and additional iterations do not result in significant increases in solution accuracy, i.e.:

\[\| \mathbf{\delta m}_k^{(i-1)} - \mathbf{\delta m}_k^{(i)} \|^2 / \| \mathbf{\delta m}_k^{(i-1)} \|^2 < tol \_ ipcg\]

- a maximum allowable number of IPCG iterations has been completed, i.e.:

\[i = max \_ iter \_ ipcg\]
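The two IPCG exit conditions can be expressed as a small check that would be called once per iteration. The function name is hypothetical, and the guard for a zero previous iterate is an addition of this sketch:

```python
def ipcg_should_stop(dm_prev, dm_curr, i, tol_ipcg, max_iter_ipcg):
    """Check the two IPCG exit conditions at iteration i."""
    if i >= max_iter_ipcg:
        return True               # iteration cap reached
    prev_norm_sq = sum(p * p for p in dm_prev)
    if prev_norm_sq == 0.0:
        return False              # relative change is undefined; keep going
    change_sq = sum((p - c) ** 2 for p, c in zip(dm_prev, dm_curr))
    return change_sq / prev_norm_sq < tol_ipcg


settled = ipcg_should_stop([1.0, 1.0], [1.0, 1.0001], i=3,
                           tol_ipcg=1e-6, max_iter_ipcg=20)
moving = ipcg_should_stop([1.0, 1.0], [1.5, 1.0], i=3,
                          tol_ipcg=1e-6, max_iter_ipcg=20)
```

Note that the criterion compares squared norms, so `tol_ipcg` bounds the square of the relative change between successive iterates.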